AI Heatmaps Won’t Save Your UX Strategy

AI heatmaps for UX optimisation have become the latest buzzword in conversion rate optimisation circles. Every week, a new tool promises to revolutionise how we understand user behaviour through machine learning-powered click maps, scroll depth analysis, and attention predictions. But here’s the uncomfortable truth: AI heatmaps can’t fix fundamentally broken user experiences. They simply provide more sophisticated ways to visualise existing problems.

As e-commerce professionals, we’ve been seduced by the promise of artificial intelligence solving our conversion challenges. AI-powered analytics tools create stunning visualisations that look impressive in stakeholder presentations. They predict where users might click, identify potential friction points, and generate colour-coded overlays that appear scientifically rigorous. Yet beneath the attractive interface lies a critical limitation: AI heatmaps are descriptive tools, not solutions.

The real danger isn’t the technology itself—it’s the misplaced confidence it creates. When store owners see an AI-generated heatmap showing 78% of users clicking above the fold, they assume they’ve found actionable insights. They haven’t. They’ve simply confirmed that users behave like users. Without addressing underlying UX architecture problems, trust deficits, or confused information hierarchies, those beautiful heatmaps become expensive vanity metrics that delay real conversion optimisation work.

The AI Heatmap Hype Cycle Explained

The analytics industry has positioned AI heatmaps as the natural evolution of traditional session recording tools. Marketing materials promise predictive user behaviour analysis without the need for significant traffic volumes. For Shopify merchants with modest visitor numbers, this sounds transformative.

Machine learning models trained on millions of anonymised sessions can theoretically predict interaction patterns on your site. The technology extrapolates from similar page structures, creating heatmaps before you’ve accumulated statistically significant user interactions.

However, this predictive approach introduces a fundamental flaw: the AI assumes your site structure resembles best practices. If your product pages have unconventional layouts, confusing navigation, or trust elements buried below key calls-to-action, the AI’s predictions may bear little resemblance to actual user behaviour.

The hype cycle follows a predictable pattern. Early adopters implement AI heatmap tools, generate impressive reports, and share vanity metrics in case studies. Others adopt the technology expecting similar results, only to discover that seeing where users don’t click doesn’t automatically explain why or prescribe fixes.

What AI Heatmaps Actually Measure

Traditional heatmaps record genuine user interactions—clicks, taps, mouse movements, and scroll depth. They’re descriptive analytics showing what happened during actual sessions. AI heatmaps introduce a predictive layer that estimates probable behaviour based on pattern recognition.

These tools typically measure:

- Predicted attention zones using eye-tracking research datasets

- Clickability predictions based on element prominence and positioning

- Engagement forecasts derived from historical interaction data across similar sites

- Scroll probability mapping showing where users likely stop reading

The distinction matters enormously for conversion optimisation. When you’re making decisions about button placement, content hierarchy, or trust badge positioning, you need to know whether you’re responding to actual user behaviour or algorithmic assumptions.

The Statistical Confidence Problem

AI predictions work best with large, diverse training datasets. When applied to niche e-commerce stores with unique value propositions or unconventional audiences, prediction accuracy deteriorates. A supplement store targeting elderly customers will have dramatically different interaction patterns than a streetwear brand targeting Gen Z—yet many AI tools apply similar predictive models to both.

The Real UX Problems AI Can’t Diagnose

Heatmaps—whether AI-powered or traditional—excel at showing where interactions happen. They fail spectacularly at revealing why users behave as they do. Understanding user motivation requires qualitative research, not just quantitative interaction data.

Consider common e-commerce UX failures:

Unclear value propositions cause users to abandon sites within seconds. A heatmap might show minimal engagement with your hero section, but it can’t tell you whether users don’t understand what you sell, don’t trust your brand, or simply don’t need your products right now.

Missing trust signals devastate conversion rates, especially for newer stores. AI heatmaps might reveal that users scroll past your checkout button, but they won’t identify that customers can’t find security badges, return policies, or genuine customer reviews that would alleviate purchase anxiety.

Broken information architecture creates navigation confusion that heatmaps visualise but don’t explain. Seeing that users click your category filters repeatedly doesn’t reveal whether filter labels are ambiguous, product sorting is counterintuitive, or search functionality is inadequate.

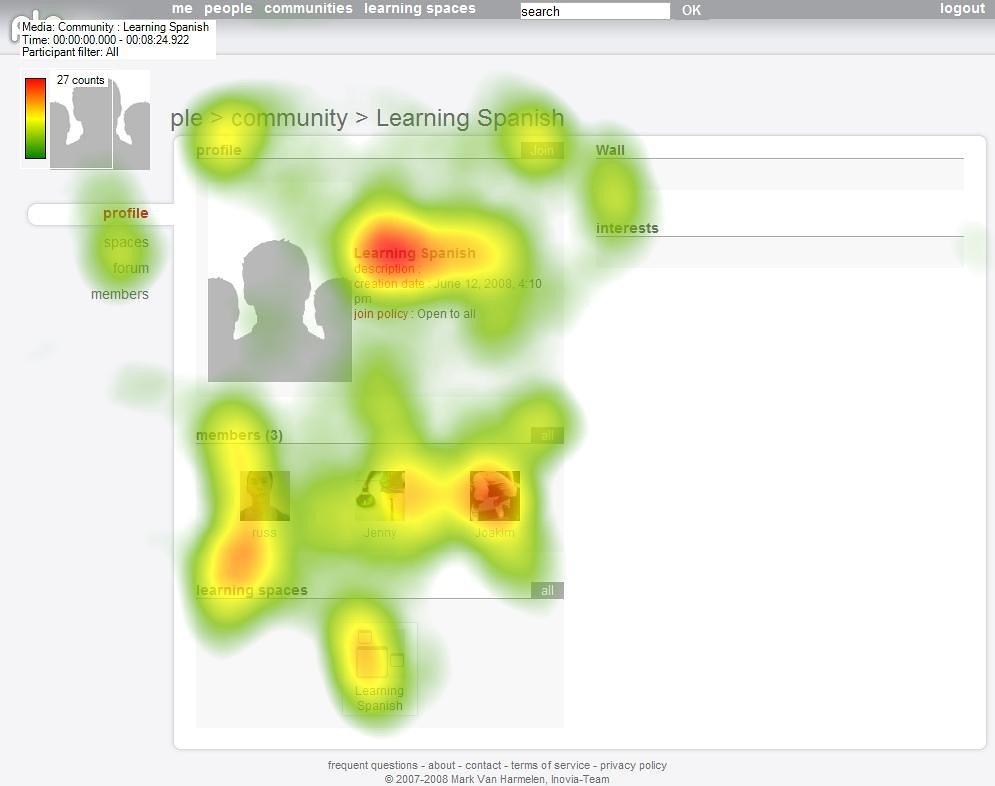

Alt text: Side-by-side comparison of traditional click heatmap and AI-predicted attention heatmap on a product page showing different concentration patterns

The Attribution Illusion

AI heatmaps can identify correlations—elements that receive attention tend to share certain design characteristics—but correlation isn’t causation. Just because high-converting pages show concentrated attention on specific zones doesn’t mean replicating that attention pattern will improve your conversions. Context and content quality matter more than positional data.

When AI Heatmaps Create False Confidence

The visualisation quality of AI-powered tools creates a dangerous psychological effect: data-driven delusion. Sophisticated dashboards with predictive overlays, confidence intervals, and machine learning badges make findings appear more credible than they deserve.

E-commerce teams present these polished heatmaps to stakeholders as evidence of user understanding. Decisions get made based on algorithmic predictions rather than validated user research. The result? Design changes that address symptoms while ignoring root causes.

Consider this common scenario: Your AI heatmap shows users aren’t engaging with product descriptions. The team responds by making descriptions more prominent, adding animations, or repositioning content. Conversions don’t improve because the real problem was that descriptions contained technical jargon that confused shoppers rather than educated them.

This false confidence delays implementing actual solutions:

- Usability testing that reveals comprehension gaps

- Customer interviews that expose trust concerns

- Cart abandonment surveys that identify checkout friction

- Accessibility audits that uncover exclusionary design patterns

The opportunity cost is substantial. While teams iterate on heatmap-derived hypotheses, fundamental UX problems persist unchanged.

The Vanity Metrics Trap

Analytics tools—especially AI-enhanced versions—excel at generating impressive-looking reports filled with metrics that feel important. Scroll depth percentages, attention duration heatmaps, and engagement scores become proxies for actual business outcomes.

But here’s the critical question every CRO consultant must ask: Does knowing that 68% of users scroll past 75% of the page actually inform your conversion strategy?

Vanity metrics share common characteristics:

- They trend upward easily with superficial changes

- They appear prominently in dashboards and reports

- They don’t directly connect to revenue or conversion outcomes

- They’re difficult to act upon without additional context

Time-on-page increases might indicate engaged users reading detailed product information—or confused customers searching desperately for shipping costs. AI heatmaps can’t distinguish between these scenarios.

Heat intensity on product images might represent genuine interest—or users clicking repeatedly because your zoom functionality is broken. The colourful overlay doesn’t reveal user intent or satisfaction.

Metrics That Actually Matter

Conversion-focused teams should prioritise:

- Add-to-cart rate by traffic source

- Cart abandonment rate at specific checkout stages

- Revenue per session segmented by user journey

- Return customer purchase frequency

- Customer acquisition cost versus lifetime value

These metrics connect directly to business outcomes and suggest specific optimisation opportunities. If cart abandonment spikes at the shipping information stage, you’ve identified a discrete problem to investigate—possibly high shipping costs, limited delivery options, or form usability issues.

Breaking Through the UX Foundation Issues

Before AI heatmaps can provide valuable insights, your e-commerce UX foundation must be structurally sound. Think of it like medical diagnostics: an MRI scan only helps if the patient’s vital signs are stable first.

Essential UX foundations include:

Clear information hierarchy that guides users through product discovery, evaluation, and purchase decision-making. Navigation labels should use customer language, not internal jargon. Category structures should reflect how users think about your products, not how your inventory database is organised.

Trust architecture strategically placed throughout the customer journey. Security badges near payment fields, return policies accessible from product pages, authentic customer reviews with photos, and transparent pricing without hidden fees all contribute to conversion optimisation more than any heatmap insight.

Mobile-first responsive design that doesn’t just resize desktop layouts but reimagines the experience for touch interactions, smaller viewports, and on-the-go browsing contexts. AI heatmaps on poorly responsive sites simply document poor experiences without fixing them.

Performance optimisation ensuring pages load quickly, images display properly, and interactions respond immediately. Users won’t engage with elements they never see because they abandoned slow-loading pages.

Alt text: Pyramid diagram with structural UX elements at base, trust and performance in middle layers, and AI analytics tools at the apex

Only after addressing these foundational elements do AI heatmaps provide meaningful optimisation guidance rather than just documenting existing problems.

How CRO Experts Actually Use Heatmap Data

Professional conversion rate optimisation goes far beyond installing analytics tools and reacting to colourful overlays. Effective CRO methodology integrates quantitative data with qualitative research to build comprehensive user understanding.

Experienced CRO consultants follow a structured approach:

1. Establish Baseline Performance

Before examining any heatmap data, document current conversion metrics across user segments, devices, and traffic sources. Identify where the most significant revenue opportunities exist. A 1% improvement in mobile checkout conversion might generate more revenue than a 10% improvement in desktop product page engagement.
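The comparison above can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the session counts, conversion rates, and order value are hypothetical, and it assumes that a relative lift in a segment's rate passes straight through to that segment's revenue.

```python
def added_revenue(sessions: int, conversion_rate: float,
                  avg_order_value: float, relative_lift: float) -> float:
    """Extra revenue from a relative lift, assuming revenue scales with the rate."""
    baseline_revenue = sessions * conversion_rate * avg_order_value
    return baseline_revenue * relative_lift


# Hypothetical monthly figures, not taken from any real store.
# Mobile checkout: many sessions reach it and half of them convert.
mobile_checkout = added_revenue(20_000, 0.50, 60.0, relative_lift=0.01)

# Desktop product page: fewer engaged sessions, and only 2% of them buy.
desktop_engagement = added_revenue(5_000, 0.02, 60.0, relative_lift=0.10)

print(f"Mobile checkout, +1%:       {mobile_checkout:,.0f}")
print(f"Desktop product page, +10%: {desktop_engagement:,.0f}")
```

With these made-up inputs, the smaller percentage lift is worth more because it applies to a much larger revenue base. Swapping in your own baseline numbers shows which segment deserves attention first.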

2. Form Hypotheses from Business Context

Customer service tickets, sales team feedback, and return reasons often reveal UX problems more accurately than algorithmic predictions. If customers repeatedly ask about sizing information, that’s a content gap—not something heatmaps will surface.

3. Use Heatmaps for Validation, Not Discovery

If you hypothesise that users don’t notice your shipping threshold callout, check heatmaps to see whether that element actually receives attention. This inverts the common workflow of letting heatmaps dictate priorities.

4. Triangulate with Multiple Data Sources

Session recordings show individual user struggles. Form analytics reveal where users abandon fields. Heatmaps show engagement patterns. A/B testing validates which changes actually improve outcomes. No single data source tells the complete story.

Professional CRO teams treat AI heatmaps as one input among many, never as the primary driver of optimisation strategy.
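As a rough illustration of the A/B validation step, the sketch below runs a two-proportion z-test on conversion counts using only the standard library. The visitor and conversion numbers are hypothetical, and a pooled z-test is just one common choice; dedicated testing platforms may use different statistics.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical test: control converts 200 of 10,000, variant 260 of 10,000.
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about why the variant performed better. That explanation still has to come from the qualitative sources above.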

Alternative Research Methods That Actually Work

If AI heatmaps aren’t the conversion optimisation panacea they’re marketed as, what should e-commerce teams prioritise instead?

User Testing with Real Customers

Five users attempting realistic tasks on your site will reveal more actionable UX problems than months of heatmap analysis. Watch someone struggle to find product specifications, abandon their cart due to unclear shipping timeframes, or express confusion about your return policy, and you’ll identify genuine conversion barriers.

Moderated sessions allow you to ask follow-up questions when users exhibit confusion. Unmoderated tools like UserTesting or Maze provide scalable options for Shopify merchants without large research budgets.

On-Site Surveys and Feedback Tools

Strategic micro-surveys at key journey points gather qualitative insights that explain quantitative patterns. Ask cart abandoners why they’re leaving. Ask product page visitors what information they need to make a purchase decision. The responses will surprise you—and often contradict heatmap-based assumptions.

Tools like Hotjar Surveys, Qualaroo, or simple exit-intent popups can collect this feedback without significant technical implementation.

Customer Interview Programs

Regular conversations with recent customers and qualified non-purchasers build deep understanding of decision-making factors, trust concerns, and feature priorities. These interviews reveal the “why” behind behaviour that heatmaps can never explain.

Even informal conversations with 2-3 customers monthly will surface insights that reshape your UX roadmap more effectively than AI predictions.

Analytics Funnel Analysis

Traditional funnel analysis showing drop-off rates between stages (product view → add to cart → checkout → purchase) identifies where to focus optimisation efforts. Segment by device, traffic source, and customer type for actionable insights.

A 40% drop-off at checkout initiation suggests different problems than 40% drop-off at payment submission. This specificity guides investigation far better than general engagement heatmaps.
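A minimal sketch of that stage-by-stage calculation follows. The stage names mirror the funnel described above, while the session counts are invented for illustration.

```python
def drop_off_rates(funnel: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Share of sessions lost between each pair of consecutive funnel stages."""
    rates = []
    for (stage, count), (next_stage, next_count) in zip(funnel, funnel[1:]):
        # Guard against an empty stage to avoid dividing by zero.
        rate = 1 - next_count / count if count else 0.0
        rates.append((f"{stage} -> {next_stage}", rate))
    return rates


# Hypothetical session counts per stage.
funnel = [
    ("product view", 10_000),
    ("add to cart", 1_500),
    ("checkout", 900),
    ("purchase", 540),
]

rates = drop_off_rates(funnel)
for transition, rate in rates:
    print(f"{transition}: {rate:.0%} drop-off")
```

Running the same function per device or traffic source, each with its own list of counts, gives the segmented view the section recommends.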

Alt text: Matrix chart comparing research methods plotting insight quality against implementation complexity, showing user testing and interviews as high-value options

Building a Balanced Analytics Stack

Rather than rejecting AI heatmaps entirely, integrate them appropriately within a comprehensive analytics ecosystem. Each tool serves specific purposes when used correctly.

A balanced stack for e-commerce conversion optimisation includes:

Core analytics platforms (Google Analytics 4, Shopify Analytics) for traffic sources, conversion funnels, and revenue attribution. These establish your baseline metrics and identify where opportunities exist.

Session recording tools (FullStory, Microsoft Clarity) showing actual user behaviour rather than predicted patterns. Watch real sessions where users abandon carts or struggle with navigation to identify specific friction points.

Form analytics (Zuko, Formisimo) revealing where users abandon forms, which fields cause hesitation, and what error messages users encounter. Form optimisation often delivers more conversion improvement than any other single change.

Traditional heatmaps (Hotjar, Crazy Egg) based on actual clicks and scrolls rather than AI predictions. Use these to validate hypotheses about element visibility and interaction patterns.

A/B testing platforms (Optimizely, VWO) to validate that proposed changes actually improve conversion rates. Testing converts opinions and data into proven optimisations.

Customer feedback tools for qualitative insights that explain quantitative patterns. No amount of AI can replace hearing customers describe their decision-making processes.

Only after establishing this foundation should you consider adding AI-enhanced analytics for pattern recognition across large datasets or predictive modelling for high-traffic sites.

Making Data-Driven Decisions That Actually Drive Conversions

The goal isn’t collecting more data—it’s making better decisions that increase revenue. True data-driven optimisation connects insights to action to outcomes.

Effective decision-making frameworks include:

PIE Prioritisation Method

Score potential optimisations across three dimensions:

- Potential: How much improvement could this change generate?

- Importance: How much traffic/revenue does this page/element affect?

- Ease: How quickly can we implement and test this change?

Prioritise changes that score highly across all three dimensions rather than chasing every heatmap anomaly.

ICE Scoring Framework

ICE is similar to PIE but emphasises:

- Impact: Expected effect on conversion rate

- Confidence: How certain are we this will work?

- Ease: Implementation complexity

Both frameworks prevent teams from pursuing low-impact optimisations just because AI tools highlighted them with pretty visualisations.
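To show how such scoring might look in practice, here is a small sketch that ranks a backlog by PIE score. It assumes each dimension is rated from 1 to 10 and that the three ratings are simply averaged, which is one common convention rather than a fixed rule. The ideas and their ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    potential: int   # 1-10: how much improvement could this generate?
    importance: int  # 1-10: how much traffic or revenue does it affect?
    ease: int        # 1-10: how quickly can it be implemented and tested?

    @property
    def pie_score(self) -> float:
        # Simple average of the three ratings (an assumed convention).
        return (self.potential + self.importance + self.ease) / 3


# Hypothetical backlog with made-up ratings.
backlog = [
    Idea("Animate product descriptions", potential=3, importance=5, ease=6),
    Idea("Show security badges near payment fields", potential=8, importance=9, ease=8),
    Idea("Clarify shipping costs on product pages", potential=7, importance=8, ease=7),
]

ranked = sorted(backlog, key=lambda idea: idea.pie_score, reverse=True)
for idea in ranked:
    print(f"{idea.pie_score:.1f}  {idea.name}")
```

The same structure works for ICE by renaming the fields to impact, confidence, and ease. Either way, the ratings are judgment calls, so the ranking is only as good as the research behind them.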

The “Why” Test

Before acting on any analytical insight, ask: “Why would this change improve conversions?” If your answer relies solely on “the heatmap showed low engagement,” that’s insufficient. Find the underlying user need, barrier, or motivation that your change addresses.

Strong answers connect to human psychology, established UX principles, or validated customer feedback. “Users don’t trust our checkout because we don’t display security badges prominently” is actionable. “Users don’t engage with this section” is descriptive but not diagnostic.

Quick Takeaways

- AI heatmaps visualise problems but don’t explain root causes—they’re descriptive tools, not diagnostic solutions for broken UX

- Predictive heatmaps make assumptions based on pattern recognition that may not apply to your specific audience or unconventional design

- Vanity metrics like scroll depth and attention duration look impressive in reports but don’t directly connect to conversion outcomes

- Foundational UX problems (trust architecture, information hierarchy, mobile responsiveness) must be fixed before analytics tools provide meaningful guidance

- Qualitative research methods (user testing, customer interviews, on-site surveys) reveal the “why” behind behaviour that quantitative tools can’t explain

- Professional CRO uses heatmaps for hypothesis validation rather than letting them dictate optimisation priorities

- Balanced analytics stacks integrate multiple tools—session recordings, form analytics, A/B testing, and customer feedback alongside heatmaps

Conclusion: Tools Don’t Replace Strategy

The e-commerce analytics industry will continue producing increasingly sophisticated AI-powered tools that promise effortless conversion optimisation. The visualisations will become more impressive, the machine learning more advanced, and the marketing more persuasive. But fundamental conversion rate optimisation principles remain unchanged: understand your customers deeply, remove barriers to purchase, build trust consistently, and test changes systematically.

AI heatmaps can support this process when implemented thoughtfully. They help validate hypotheses, identify patterns across large datasets, and communicate findings to stakeholders visually. They fail dramatically when treated as substitutes for genuine user research, strategic thinking, and foundational UX improvements.

For Shopify store owners and CRO consultants, the path forward isn’t abandoning analytics tools—it’s using them appropriately within comprehensive optimisation methodologies. Invest your budget in user research before expensive AI platforms. Fix obvious trust deficits and navigation problems before obsessing over attention heatmaps. Test changes that address real customer barriers rather than optimising for algorithmic predictions.

The stores that achieve sustainable conversion improvements don’t have better analytics tools—they have clearer understanding of customer needs, stronger UX foundations, and disciplined testing processes. The data serves the strategy, not the other way around.

Ready to move beyond vanity metrics? Start by interviewing five recent customers about their purchase decision process. Ask what nearly stopped them from buying. Ask what information they wished they’d found more easily. Those conversations will reveal more actionable conversion opportunities than any AI heatmap ever could.

Frequently Asked Questions

Q: Are AI heatmaps completely useless for conversion optimisation?

A: No, but they’re often misused. AI heatmaps work best for hypothesis validation on high-traffic sites with solid UX foundations. They become problematic when teams use them as primary research tools or let algorithmic predictions override customer feedback and usability testing.

Q: How much traffic do I need before heatmap data becomes reliable?

A: As a rule of thumb, traditional heatmaps need at least 2,000-3,000 page views per page before patterns stabilise. That figure is a working guideline rather than a formal significance threshold, and more is better. AI predictive heatmaps claim to work with less data but introduce accuracy concerns. Focus on high-traffic pages (homepage, key product pages, checkout) where sample sizes naturally accumulate faster.
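One way to see why page-view counts matter is to put a confidence interval around an observed click rate. The sketch below uses the Wilson score interval at roughly 95% confidence. The click and view counts are hypothetical, and this is only one reasonable way to gauge uncertainty, not a method prescribed by any heatmap vendor.

```python
from math import sqrt


def wilson_interval(clicks: int, views: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a click rate (z = 1.96 is roughly 95%)."""
    if views <= 0:
        raise ValueError("views must be positive")
    p = clicks / views
    denom = 1 + z**2 / views
    centre = (p + z**2 / (2 * views)) / denom
    margin = z * sqrt(p * (1 - p) / views + z**2 / (4 * views**2)) / denom
    return centre - margin, centre + margin


# The same observed 5% click rate at two hypothetical traffic levels.
low_small, high_small = wilson_interval(10, 200)
low_large, high_large = wilson_interval(150, 3_000)

print(f"200 views:   {low_small:.1%} to {high_small:.1%}")
print(f"3,000 views: {low_large:.1%} to {high_large:.1%}")
```

At low traffic the plausible range for the true click rate is wide, so differences between page elements are hard to tell apart from noise. The range narrows as views accumulate.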

Q: What’s the single most important alternative to AI heatmaps for improving conversions?

A: Customer interviews and user testing sessions. Five moderated sessions where you watch real users attempt realistic tasks will reveal more conversion barriers than months of heatmap analysis. Understanding why users behave as they do matters more than just documenting what they do.

**Q: Should I